AI-powered products are widely available today, and their number continues to grow quickly. Recent statistics suggest that the market for AI apps will exceed 2 billion U.S. dollars by 2025, driven by their potential to provide innovative and productive solutions to the complex challenges people face today. While these products offer many benefits, they also come with a set of unique issues.

With that in mind, development teams should not only build well-functioning AI-powered products but also test them carefully, so that they adhere to ethical standards while delivering the best possible results and a great user experience. However, testing AI applications is a demanding process that requires a thorough understanding of the app’s architecture and of the AI model built into its functionality.

Testing AI Applications: What Are The Reasons?

Nowadays, more and more organizations are integrating AI and advanced features into their products. In doing so, they find that AI-based software is not as predictable as traditional software. Traditional software follows specific, rule-based logic, so teams get fixed and predictable results. Artificial intelligence software is different: it is more complex, dynamic, and unpredictable. To test AI applications, each team member therefore needs to understand how the artificial intelligence model behaves and how it processes data, and to check that it functions ethically and accurately.

To sum up, QA engineers need to:

✅ Guarantee that the artificial intelligence system is able to complete tasks in the right way.

✅ Check how well an artificial intelligence application performs under different types of conditions, how fast it provides responses, etc.

✅ Validate how successfully an artificial intelligence app functions by comparing AI-generated results against expected outcomes to identify errors.

✅ Examine whether the AI-based system is biased from the start and whether it makes unfair decisions.

Principles of AI For Software Testing

- Transparency. When you develop an AI project, you need to understand why AI produces specific results, how an AI-based model makes decisions, and what data have been used for those purposes. It will help build trust and adhere to ethical standards.

- Human-In-the-Loop (HIL). Feedback from human experts helps refine the AI’s algorithms. For example, once content is flagged by the AI, human moderators review it to confirm whether the flag is correct. This improves the AI’s ability to handle complex or uncertain situations and make more accurate decisions.

- Fairness and Absence of Bias. Ethical concerns such as bias and fairness should be taken into account throughout the entire testing process. When an AI-based model systematically delivers unfair results, you should update your data, run regular audits, and review the algorithms and their decisions to make sure that the use of the AI system aligns with ethical standards.

- Accuracy and Reliability. Accuracy refers to an AI system’s ability to produce correct results while reliability means delivering predictable outcomes across various scenarios.

- Scalability and Performance. Artificial intelligence models should stay efficient as they scale to larger datasets and more complicated tasks. Assessing the model’s ability to handle increasing workloads without losing speed or accuracy is therefore essential.
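To make the fairness principle more concrete, here is a minimal sketch of one possible bias check. It uses the demographic parity gap (the difference in positive-prediction rates between groups), which is one metric among many and is chosen here only for illustration. The predictions, the group labels, and the function names are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs (1 = approved) and a hypothetical group attribute.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rate_by_group(preds, group)
gap = demographic_parity_gap(preds, group)
print(rates, gap)
```

A large gap does not prove that the model is unfair, but it is a useful signal that the data and the model’s decisions deserve a closer review.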

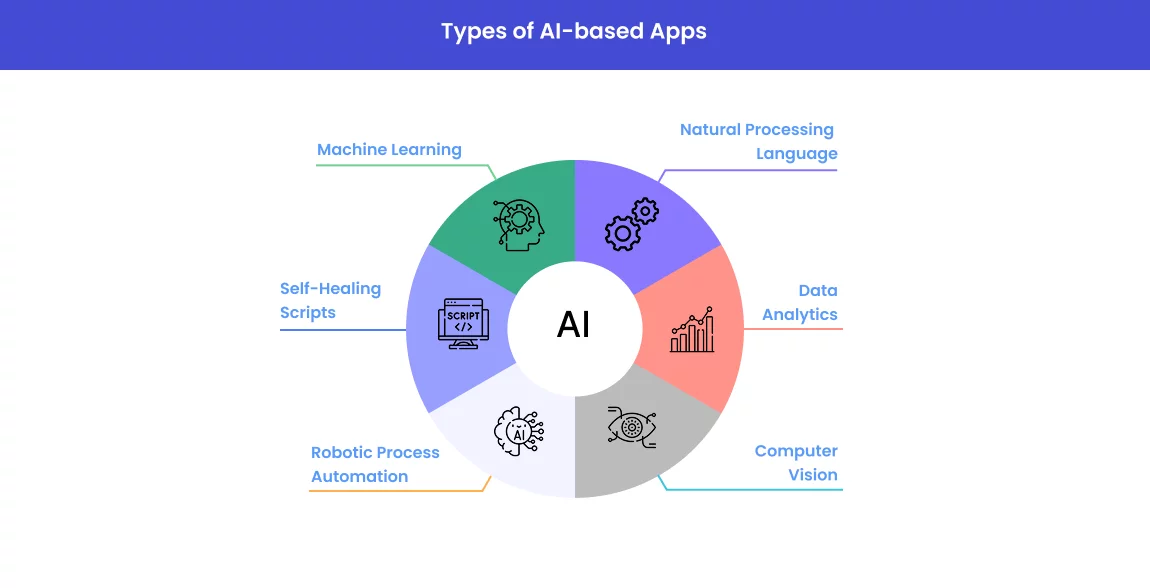

Types of AI-based Apps and Their Testing Needs

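Quality assurance is important for all AI-based models and systems, such as machine learning, deep learning, natural language processing, computer vision, generative AI, and robotic process automation.

Let’s break them down in detail next 👀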

#1: Machine learning

Machine learning algorithms learn from data: by training on a large dataset, they identify patterns and make predictions instead of being explicitly programmed to perform certain tasks. ML software requires testing in order to:

- Check the model’s ability to predict outcomes accurately based on labeled data.

- Assess the model’s capability of finding hidden patterns or groupings in unlabeled data.

- Validate how well the model learns a strategy to achieve maximal reward through repeated attempts.

#2: Deep learning

A kind of artificial intelligence model that is typically trained on large datasets of labeled data. The algorithms learn to associate features in the data with the correct labels and need testing in order to:

- Make sure that the model fulfills its purpose on previously unseen data.

- Detect whether the model has memorized noise in the training data instead of learning the underlying patterns (overfitting).

- Assess resource utilization during training and inference.
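One simple way to spot the second problem is to compare accuracy on training data with accuracy on unseen data. The sketch below assumes you already have labels and predictions for both sets; the sample values and the `MAX_GAP` threshold are hypothetical and should be tuned per project.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def overfitting_gap(train_true, train_pred, val_true, val_pred):
    """Training accuracy minus accuracy on previously unseen data."""
    return accuracy(train_true, train_pred) - accuracy(val_true, val_pred)


MAX_GAP = 0.10  # assumption: the largest gap this project tolerates

# Hypothetical labels and predictions.
train_true, train_pred = [1, 0, 1, 0], [1, 0, 1, 0]  # perfect on training data
val_true, val_pred = [1, 0, 1, 0], [1, 1, 0, 0]      # much weaker on unseen data

gap = overfitting_gap(train_true, train_pred, val_true, val_pred)
looks_overfit = gap > MAX_GAP
print(gap, looks_overfit)
```

A model that scores far better on data it has seen than on data it has not seen has probably memorized the training set.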

#3: Natural language processing

NLP models, which process information based on patterns between the components of speech (letters, words, sentences, etc.), are developed to understand natural language as humans do. They are tested in order to:

- Identify whether they produce accurate results after they process human language.

- Determine whether they are able to keep context in tasks such as translation or summarization.

- Identify whether the models are able to detect the feelings, sentiments, and emotions behind a text or interaction.

#4: Computer vision

Algorithms powered by computer vision technology allow devices to identify and classify objects and people in images and videos. They are tested to:

- Check if the algorithms correctly identify and classify the images and videos they process.

- Validate if the algorithms are able to accurately locate and identify multiple objects within an image/video.

- Evaluate if the algorithms achieve consistent results even under challenging conditions such as occlusions, motion blur, poor lighting, and varied backgrounds.
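For the localization check, a widely used measure is intersection over union (IoU), which compares a predicted bounding box with the expected one. The sketch below is a plain-Python illustration; the `(x_min, y_min, x_max, y_max)` box format and the sample boxes are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (if the boxes overlap at all).
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height are clamped to zero when the boxes do not overlap.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


expected_box = (0, 0, 10, 10)    # hypothetical ground-truth annotation
predicted_box = (5, 5, 15, 15)   # hypothetical model output
print(iou(expected_box, predicted_box))
```

A test can then assert that the IoU stays above a threshold the team agrees on, both for clean images and for blurred or poorly lit variants.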

#5: Generative AI Models

These models are trained on large datasets to uncover underlying patterns and learn how data is structured to generate new content – text, image, audio, video, and code. Testing generative AI applications is crucial to:

- Assess generated content based on criteria such as fluency, creativity, relevance, etc.

- Confirm that the models generate unique, logically correct, and diverse outputs.

- Make sure that the models do not generate harmful or biased content.
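Diversity is one of the few criteria in this list that is easy to approximate automatically. The sketch below computes a distinct-n score, the share of unique n-grams across a batch of generated texts. It is a rough proxy that uses simple whitespace tokenization, and the sample outputs are hypothetical.

```python
def distinct_n(texts, n=2):
    """Share of unique n-grams across all texts (1.0 means no repetition)."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


# Hypothetical batches of generated outputs.
repetitive = ["the cat sat", "the cat sat"]
varied = ["the cat sat", "a dog ran"]

print(distinct_n(repetitive), distinct_n(varied))
```

Criteria such as fluency, relevance, and harmfulness usually still need human review or a dedicated evaluation model.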

#6: Robotic process automation

This is software powered by artificial intelligence technologies that derives information from vision sensors to segment and understand scenes as well as to detect and classify objects. Such systems should be tested in order to:

- Verify that the robot successfully completes its intended tasks, even in different environments and scenarios.

- Measure the robot’s efficiency, speed, productivity, and accuracy when it performs tasks, and check that it meets safety standards and relevant laws and regulations.

- Optimize the robot’s algorithms and fix errors that could lead to equipment or environmental damage.

Key Factors To Consider While Testing AI Applications

When testing AI-based solutions, it’s important to take into account the following factors:

- Input/Output Data Balance. Not only the input data but also the intended outputs are essential when you test an artificial intelligence app. The goal is to help the model handle real-world scenarios and deliver error-free outputs: start with small data volumes, make changes according to the outputs produced, and only then expand the datasets.

- Training Data. Use training data to teach an AI model from historical data so that it develops its decision-making capabilities. Do not forget to review outcomes and adjust the model to improve accuracy.

- Testing Data. These data sets are logically designed to test all possible combinations and determine how well the trained models produce the intended result. As the number of iterations and the volume of data grow, the model should be refined.

- System Validation Test Suites. With algorithms and test data sets, you create system validation test suites to verify that the models are free of problems.

- Reporting Test Findings. When validating algorithms, QA team members should report confidence criteria as ranges. Range-based accuracy or confidence scores describe artificial intelligence algorithms better than test results presented as single statistics.
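As an illustration of range-based reporting, the sketch below turns a raw accuracy figure into a confidence interval. It uses the Wilson score interval, which is one common choice and not the only one. The counts are hypothetical, and `z=1.96` corresponds to roughly 95% confidence.

```python
import math


def wilson_interval(correct, total, z=1.96):
    """Wilson score interval for a proportion such as accuracy."""
    if total == 0:
        raise ValueError("total must be positive")
    p = correct / total
    denom = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return center - half_width, center + half_width


# Hypothetical test run: 90 correct predictions out of 100 test cases.
low, high = wilson_interval(90, 100)
print(f"accuracy 0.90, approx. 95% interval [{low:.3f}, {high:.3f}]")
```

Reporting the interval next to the point estimate shows how much the result could move with a different test sample, which matters most when the test set is small.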

Testing Strategies To Use When Testing AI Products

When developing and testing artificial intelligence products, it is essential to avoid unnecessary problems, and a well-planned testing strategy helps here. A testing strategy is a plan that describes the testing process: its objectives and scope, test data generation, test creation, execution, results, and maintenance, edge cases, testing methodologies and approaches, test automation tools, and so on. Below, we overview the approaches that are critical for testing AI applications.

There are three approaches that can be used during the AI lifecycle:

- Data-centric testing. This approach is applied to test the quality of the raw data used to train and assess AI models by checking the completeness, accuracy, consistency, and validity of the data, detecting biases and drift.

- Model-centric testing. This approach is used to test the overall quality of the AI-based product through performance testing, metamorphic testing, robustness testing, and explainability testing.

- Deployment-centric testing. This approach is applied to test the quality of the delivery of AI-based products through scalability testing, latency testing, security testing, and A/B testing.
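Metamorphic testing, mentioned under the model-centric approach, is useful when there is no exact expected output for every input. Instead of checking a single answer, you check that a change which should not affect the result really does not affect it. The sketch below uses a toy keyword classifier as a hypothetical stand-in for a real model; the transform and the samples are assumptions as well.

```python
def toy_sentiment(text):
    """Hypothetical stand-in for a real model: counts positive vs negative words."""
    positive = {"good", "great", "love"}
    negative = {"bad", "poor", "hate"}
    tokens = text.lower().split()
    score = sum(t in positive for t in tokens) - sum(t in negative for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def add_noise(text):
    """Label-preserving transform: upper-case the text and pad it with spaces."""
    return "  " + text.upper() + "  "


def metamorphic_violations(model, inputs, transform):
    """Return the inputs whose prediction changes under the transform."""
    return [x for x in inputs if model(x) != model(transform(x))]


samples = ["I love this app", "bad support", "it works"]
violations = metamorphic_violations(toy_sentiment, samples, add_noise)
print(violations)  # an empty list means the relation holds for these samples
```

The same pattern applies to other relations, for example swapping a word for a synonym in text or slightly changing the brightness of an image.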

It is also important to automate testing of the app functionality that is powered by an AI model, using the following types of tests:

- With unit tests, teams can check the functionality of the app and some components of the AI-driven model.

- By using integration tests, teams can check how data flows between the app and the model and how the two work together.

- With performance tests, teams can evaluate the speed and efficiency of the app and the AI model and see how resources are utilized.

- With e2e tests, teams can create simulations of user workflows and evaluate the overall system performance.
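As a small illustration of the unit-test level, the sketch below tests the app logic around a model rather than the model itself. The `StubModel` class and the `approve_request` function are hypothetical; the stub returns a fixed score so that the test stays deterministic.

```python
import unittest


class StubModel:
    """Hypothetical stand-in that always returns the same score."""

    def __init__(self, score):
        self.score = score

    def predict(self, features):
        return self.score


def approve_request(model, features, threshold=0.5):
    """App-level logic under test: turn a model score into a decision."""
    score = model.predict(features)
    if not 0.0 <= score <= 1.0:
        raise ValueError("model score out of range")
    return score >= threshold


class ApproveRequestTest(unittest.TestCase):
    def test_high_score_is_approved(self):
        self.assertTrue(approve_request(StubModel(0.9), {"age": 30}))

    def test_low_score_is_rejected(self):
        self.assertFalse(approve_request(StubModel(0.1), {"age": 30}))

    def test_invalid_score_raises(self):
        with self.assertRaises(ValueError):
            approve_request(StubModel(1.7), {"age": 30})


# Run the suite programmatically so the script does not exit afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApproveRequestTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Integration and e2e tests would then replace the stub with the real model or a deployed endpoint.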

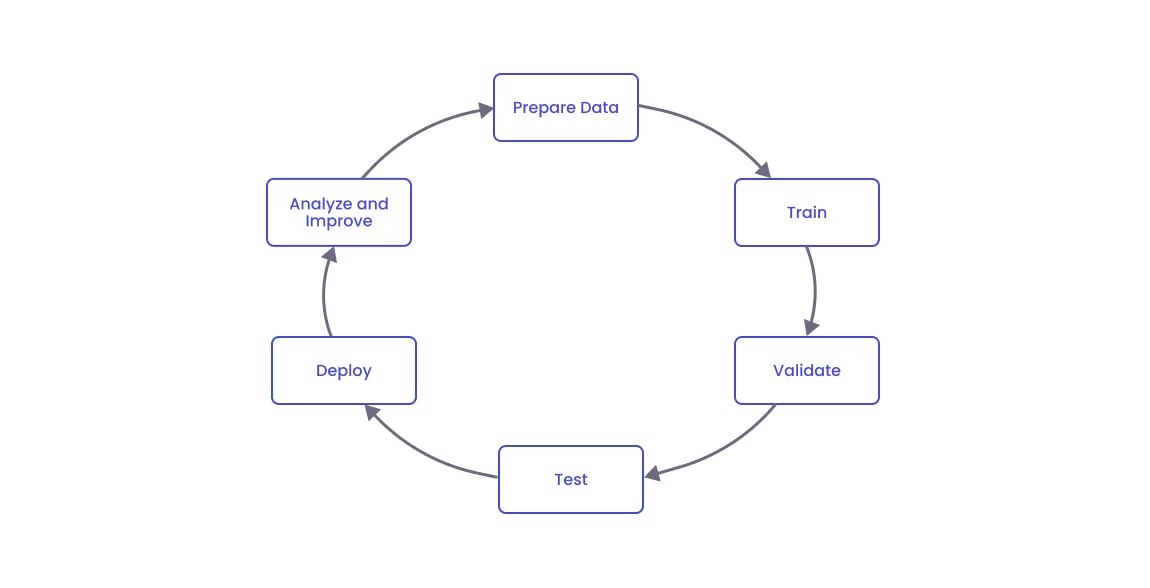

How To Test AI Applications: Key Steps

Effective testing of AI-based solutions starts from the fact that the functionality of these products is built around an artificial intelligence model that processes data and generates insights. This means that you should combine traditional test automation approaches with AI-specific techniques. The following steps help you test both the AI application and the model integrated into its architecture, so that it provides the best possible results for end users.

Step 1: Prepare Data For Testing AI Applications

Before starting to test, it is important to collect the data that will be used for training and testing and to assess its quality. You need to remove inaccuracies and inconsistencies from the data and organize it into a format that AI algorithms can interpret correctly. Remember that if the data contains errors, is incomplete, or is biased, the AI-based system will deliver wrong results and will not be able to complete the necessary tasks or imitate human behavior.

So, you need to check for missing or incorrect values in the data, make sure that the data covers all relevant scenarios (e.g., different age groups, genders, and regions), and split the data into training, validation, and test sets, which helps you detect and avoid overfitting.
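The checks described in this step can be sketched in a few lines of plain Python. The record layout, the field names, and the 70/15/15 split ratio below are assumptions for illustration; real projects often rely on a data library for this.

```python
import random


def find_incomplete_rows(rows, required_fields):
    """Return the indexes of rows where a required field is missing or None."""
    return [
        i for i, row in enumerate(rows)
        if any(row.get(field) is None for field in required_fields)
    ]


def split_dataset(rows, train=0.7, val=0.15, seed=42):
    """Shuffle a copy of the rows and split it into train, validation, and test sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


# Hypothetical records; one of them has a missing value.
rows = [{"id": i, "age": 20 + i} for i in range(20)]
rows[3]["age"] = None

incomplete = find_incomplete_rows(rows, ["id", "age"])
clean = [row for i, row in enumerate(rows) if i not in incomplete]
train_set, val_set, test_set = split_dataset(clean)
print(incomplete, len(train_set), len(val_set), len(test_set))
```

A fixed seed keeps the split reproducible, so a failing test can be rerun on exactly the same data.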

Step 2: Incorporate Training

Once your data is ready, you can use the carefully selected data to train the AI model so that it learns and starts making predictions or decisions. If the model makes mistakes at this early stage of learning, you should correct them to improve its accuracy.

Step 3: Focus on AI Validation

At this step, you validate the accuracy of the AI model. To do so, you need a separate validation dataset, which is broader and more complex than the training one. Validation can uncover security issues or gaps in the model’s abilities that are difficult to find with training data alone. In addition to overall accuracy, you can check how often the model’s positive predictions are correct (precision) and how many of the actual positive cases it identifies (recall).
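To show what checking beyond plain accuracy can look like, here is a minimal sketch that computes precision and recall for a binary classifier. The labels and predictions are hypothetical.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical validation labels and model predictions.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```

Precision tells you how many of the positive predictions were right, while recall tells you how many of the real positive cases the model found. Which of the two matters more depends on the cost of each kind of error.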

Step 4: Perform AI Testing

Only after validation is complete can you start the testing process, in which you evaluate the artificial intelligence model against different scenarios and use cases. Development and QA teams assess the model’s performance by simulating real-world conditions, which helps them detect potential challenges and limitations and resolve them so that the model provides trustworthy and unbiased results. If the model does not reach the desired accuracy, it must go through the training stage again.

Step 5: Deploy

Once the model has been tested, it is time to deploy it to the intended infrastructure, either on-premises or in the cloud. In the live environment, the model will provide recommendations and make predictions about what may happen. Before deploying the model, teams should consider the scalability, reliability, and security of the infrastructure, because the chosen environment should be able to handle the expected workload and user interactions as well as keep the data protected.

Step 6: Analyze and Improve

At this stage, it is critical to analyze the behavior of the artificial intelligence model: accuracy errors, data instability, and wrong decisions or predictions all affect the results. To monitor the model’s performance closely, you can combine statistical analysis, automated monitoring systems, and periodic reviews and feedback to detect potential issues and adjust the algorithms accordingly. By applying continuous testing, feedback, and defect analysis, teams can launch powerful AI models that not only meet the specific needs of the project or application but also produce results faster and with more precision.
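One statistic that is sometimes used for this kind of monitoring is the Population Stability Index (PSI), which compares the distribution of a feature in live traffic with its distribution in a baseline sample. The sketch below is a simplified illustration; the bin count, the sample data, and the 0.25 alert threshold (a commonly cited rule of thumb) are assumptions.

```python
import math


def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # out-of-range values go to the edge bins
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = list(range(100))           # hypothetical feature values at training time
live = [v + 40 for v in baseline]     # hypothetical shifted values in production

DRIFT_THRESHOLD = 0.25  # assumption: rule-of-thumb level for a significant shift
drifted = psi(baseline, live) > DRIFT_THRESHOLD
print(psi(baseline, baseline), psi(baseline, live), drifted)
```

A check like this can run on a schedule and trigger a review or retraining when the score crosses the threshold.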

Challenges in Testing AI Applications

Testing AI applications presents several challenges that can affect their effectiveness and reliability. Key issues include:

Unrealistic Expectations

When testing AI/ML-driven projects, teams may face unclear requirements. Traditional software projects usually start with clear and well-defined requirements. By contrast, requirements for AI/ML products may be unrealistic, and the processes that would actually benefit from AI may not be identified. This leads to failures because the goals of the AI-based app are unclear.

Data Imbalance and Bias

When teams work on AI-based projects, they may deal with biased results and inaccurate predictions, because AI models may be trained on imbalanced datasets. That’s why it is crucial to detect imbalanced datasets and to correct them by carefully collecting and preprocessing data. To address this problem, teams can apply under-sampling, over-sampling, and synthetic data generation techniques to improve the performance of the model.
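Of the techniques named above, random over-sampling is the simplest to sketch: rows from the smaller classes are duplicated until every class is as large as the biggest one. The record layout and the sample data below are hypothetical.

```python
import random
from collections import Counter


def random_oversample(rows, label_key, seed=0):
    """Duplicate randomly chosen minority-class rows until all classes are equal in size."""
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)

    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Top up smaller classes with random duplicates of their own rows.
        balanced.extend(rng.choice(group) for _ in range(target - len(group)))
    return balanced


# Hypothetical imbalanced training data: 8 normal rows and 2 fraud rows.
transactions = [{"label": "ok"} for _ in range(8)] + [{"label": "fraud"} for _ in range(2)]

balanced = random_oversample(transactions, "label")
counts = Counter(row["label"] for row in balanced)
print(counts)
```

Over-sampling should be applied to the training split only. If duplicated rows leak into the validation or test sets, the measured accuracy will look better than it really is.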

Interpretability Issues

Teams that test AI software face the complexity of AI algorithms and model decision-making processes. They find it difficult to discover how the AI makes predictions and decisions and how it recognizes complex patterns. If the process isn’t transparent, it is hard to show that the model adheres to regulatory standards. Thus, teams should incorporate explainable artificial intelligence techniques (such as SHAP and LIME), which enhance interpretability and provide insights into model behavior.
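SHAP and LIME are dedicated libraries with their own APIs, which are not reproduced here. As a simpler stand-in that conveys the same idea, the sketch below computes permutation importance: it shuffles one feature and measures how much accuracy drops. The toy model, the feature names, and the data are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Share of rows for which the model predicts the expected label."""
    return sum(1 for row, y in zip(rows, labels) if model(row) == y) / len(rows)


def permutation_importance(model, rows, labels, feature, seed=0):
    """Accuracy drop after shuffling one feature across rows."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    values = [row[feature] for row in rows]
    rng.shuffle(values)
    permuted = [{**row, feature: v} for row, v in zip(rows, values)]
    return baseline - accuracy(model, permuted, labels)


def toy_model(row):
    """Hypothetical model that only looks at income."""
    return 1 if row["income"] > 50 else 0


rows = [
    {"income": 20, "shoe_size": 42},
    {"income": 80, "shoe_size": 38},
    {"income": 30, "shoe_size": 44},
    {"income": 90, "shoe_size": 40},
]
labels = [0, 1, 0, 1]

income_importance = permutation_importance(toy_model, rows, labels, "income")
shoe_importance = permutation_importance(toy_model, rows, labels, "shoe_size")
print(income_importance, shoe_importance)
```

A feature the model ignores has an importance of zero. A high importance for a feature that should be irrelevant, or that acts as a proxy for a protected attribute, is worth investigating.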

Absence of Established Testing Standards

Without universally accepted tools for AI model testing in the AI-QA ecosystem, teams have problems with inconsistencies when they evaluate and validate models. In addition to that, they don’t have established standards for data formats, workflow integration, etc. Incompatibility between tools for AI app testing and existing QA frameworks makes the AI software development process more complicated.

Lack of Resources

Testing AI models requires major initial investments, including computing power and training the team or hiring new specialists. As models scale and grow in complexity, they are trained and tested on larger datasets, which demands significant computational resources. As a result, you need to make sure that teams are equipped with high-performance computing infrastructure that helps them manage these demands and scale the models effectively.

AI Software Testing Tools and Frameworks

Testing AI applications requires a blend of manual effort and specialized AI automation testing approaches. Below, you can find some special AI and software testing tools:

- TensorFlow Extended (TFX). Designed by Google, this open-source platform allows teams to manage machine learning models in production environments as well as speed up ML workflow, from data collection and pre-processing to model training and deployment.

- IBM Watson OpenScale. This open environment allows teams to manage the AI lifecycle and test AI/ML models with reduced test cycle times. It also offers options for monitoring AI/ML models in the cloud or wherever else they are deployed to check their accuracy and fairness.

- PyTorch. Built on Python, it is an open-source machine learning framework that includes libraries for testing AI-driven models and assessing their performance in different types of data, like images, text, and audio.

- DataRobot. It is an automated machine learning platform that supports various languages and allows teams to develop, deliver, and monitor AI models. The platform is also useful if ML models scale and develop.

- Apache MXNet. Having an easy-to-use interface, this open-source deep learning framework allows teams to define, train, and deploy deep neural networks across a diverse range of devices.

Tips for Better Testing AI Products

- Teams should understand from the start which problems the AI-based project should solve and which goals it should achieve.

- Teams should be attentive during data collection, data evaluation, and test case creation to make sure the data is clean and relevant.

- Teams should retrain and recheck AI-driven models whenever new data is incorporated, existing data is updated, or task requirements change, in order to keep the models relevant and effective.

- Teams should have enough data for testing AI applications to produce more accurate results.

- Teams should follow regulations like GDPR to meet strict data standards and avoid bias and misuse.

🤔 What About Testing AI Applications to Stand Out From The Crowd?

There’s no doubt that any business can reap the benefits of AI. But investing in AI-powered systems is not enough – you should thoroughly test them and improve test coverage.

Also, remember that testing AI software is an ongoing process, which evolves with technological advancements. With that in view, you need to regularly test AI-based products to carefully assess if they are transparent, unbiased, highly reliable, and accurate.

Only by following best practices and using modern AI testing tools and test automation platforms can you create AI-based products that address the challenges and requirements of today’s digital world.

👉 Don’t hesitate to contact our specialists if you have any questions about testing AI applications.